```python
import torch
from d2l import torch as d2l
from torch import nn
from torch.nn import functional as F
```

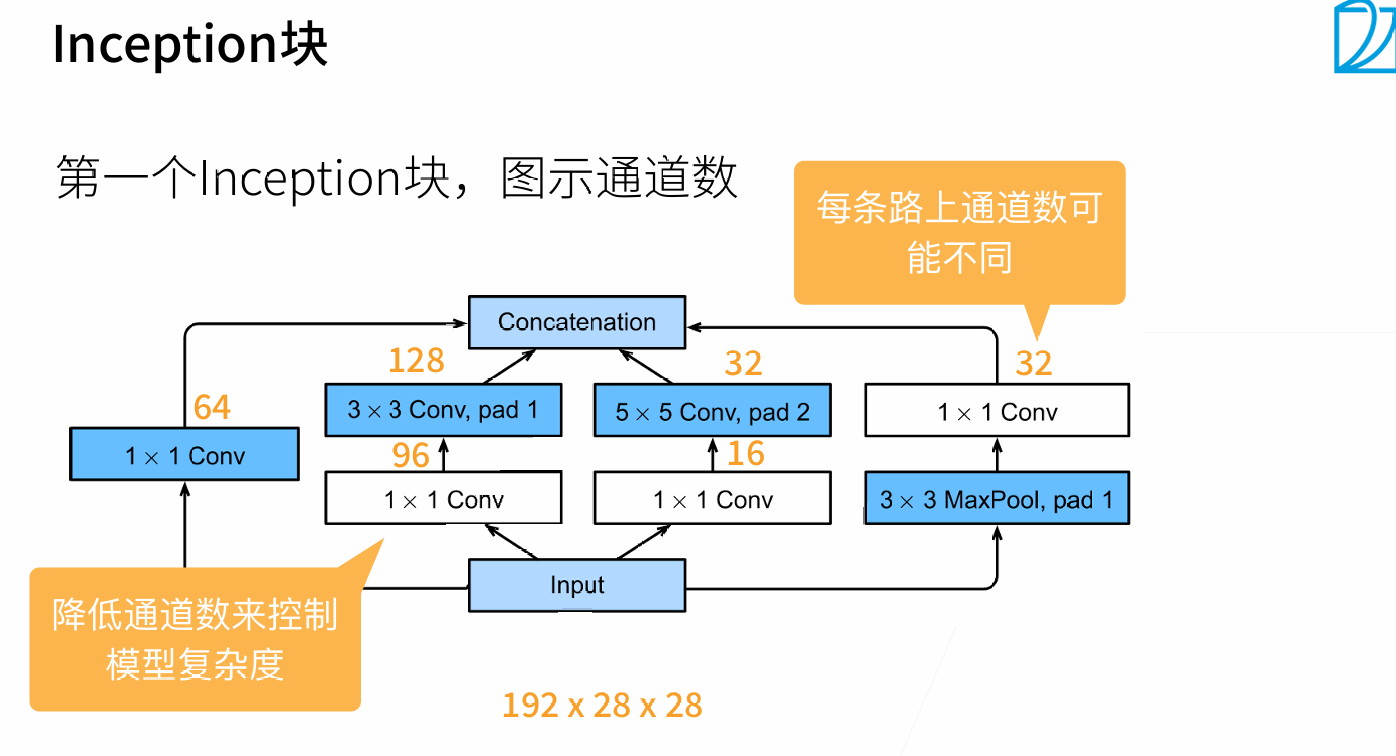

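```python
# 1. Inception block
class Inception(nn.Module):
    # An Inception block has four parallel paths.
    # in_channels: number of input channels; c1..c4: output channels of each path.
    # If a path contains several convolutional layers, its entry is a tuple
    # with one output-channel count per layer (c2 and c3 below).
    def __init__(self, in_channels, c1, c2, c3, c4, **kwargs):
        super(Inception, self).__init__(**kwargs)
        # Path 1: 1x1 convolution
        self.p1_1 = nn.Conv2d(in_channels, c1, kernel_size=1)
        # Path 2: 1x1 convolution -> 3x3 convolution, padding=1
        self.p2_1 = nn.Conv2d(in_channels, c2[0], kernel_size=1)
        self.p2_2 = nn.Conv2d(c2[0], c2[1], kernel_size=3, padding=1)
        # Path 3: 1x1 convolution -> 5x5 convolution, padding=2
        self.p3_1 = nn.Conv2d(in_channels, c3[0], kernel_size=1)
        self.p3_2 = nn.Conv2d(c3[0], c3[1], kernel_size=5, padding=2)
        # Path 4: 3x3 max pooling (stride=1, padding=1) -> 1x1 convolution
        self.p4_1 = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
        self.p4_2 = nn.Conv2d(in_channels, c4, kernel_size=1)

    def forward(self, x):
        p1 = F.relu(self.p1_1(x))
        p2 = F.relu(self.p2_2(F.relu(self.p2_1(x))))
        p3 = F.relu(self.p3_2(F.relu(self.p3_1(x))))
        p4 = F.relu(self.p4_2(self.p4_1(x)))
        # Concatenate the four path outputs along the channel dimension
        return torch.cat((p1, p2, p3, p4), dim=1)
```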
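The 1×1 convolutions at the start of paths 2 and 3 shrink the channel count before the more expensive 3×3 and 5×5 convolutions. A quick parameter count makes the saving concrete. This is a plain-Python sketch using the standard Conv2d parameter formula (weights plus one bias per output channel); `conv_params` is a hypothetical helper, and the "single 5×5 convolution" baseline is a hypothetical comparison that is not part of the model.

```python
def conv_params(in_ch, out_ch, k):
    """Parameters of a k x k Conv2d with bias: weights + one bias per output channel."""
    return in_ch * out_ch * k * k + out_ch

# Path 3 of the first Inception block in stage 3: 192 -> 16 (1x1) -> 32 (5x5)
with_bottleneck = conv_params(192, 16, 1) + conv_params(16, 32, 5)

# Hypothetical baseline: one 5x5 convolution straight from 192 to 32 channels
without_bottleneck = conv_params(192, 32, 5)

print(with_bottleneck, without_bottleneck)
print(f"reduction: {without_bottleneck / with_bottleneck:.1f}x")
```

With these numbers the bottlenecked path needs roughly a tenth of the parameters of the direct 5×5 convolution while producing the same 32 output channels.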

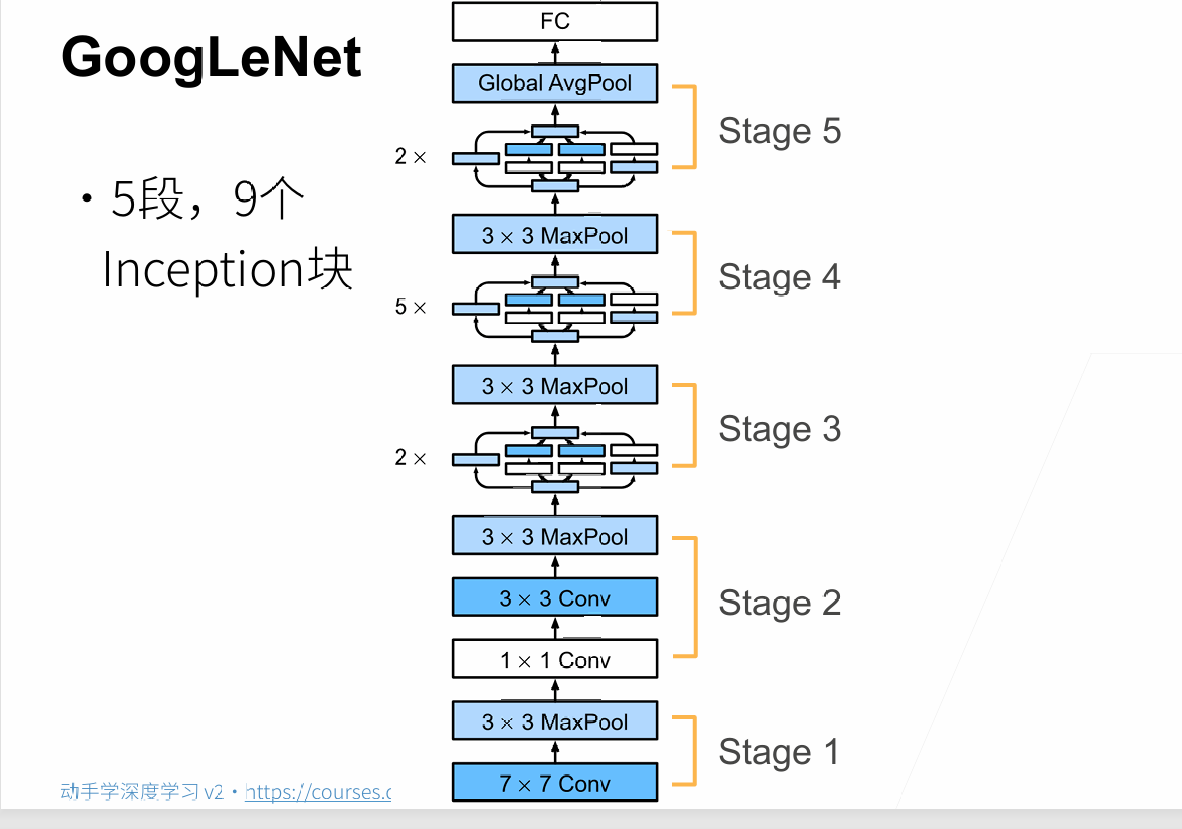

```python
# Stages

# Stage 1: 7x7 convolution (64 channels, stride 2) + 3x3 max pooling (stride 2)
b1 = nn.Sequential(nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
                   nn.ReLU(),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 2: 1x1 convolution -> 3x3 convolution (192 channels) -> max pooling
b2 = nn.Sequential(nn.Conv2d(64, 64, kernel_size=1),
                   nn.ReLU(),
                   nn.Conv2d(64, 192, kernel_size=3, padding=1),
                   nn.ReLU(),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# The number of Inception blocks per stage is a design choice.

# Stage 3: 2 Inception blocks and a max-pooling layer
b3 = nn.Sequential(Inception(192, 64, (96, 128), (16, 32), 32),
                   Inception(256, 128, (128, 192), (32, 96), 64),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 4: 5 Inception blocks and a max-pooling layer
b4 = nn.Sequential(Inception(480, 192, (96, 208), (16, 48), 64),
                   Inception(512, 160, (112, 224), (24, 64), 64),
                   Inception(512, 128, (128, 256), (24, 64), 64),
                   Inception(512, 112, (144, 288), (32, 64), 64),
                   Inception(528, 256, (160, 320), (32, 128), 128),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 5: 2 Inception blocks and global average pooling (AdaptiveAvgPool2d)
b5 = nn.Sequential(Inception(832, 256, (160, 320), (32, 128), 128),
                   Inception(832, 384, (192, 384), (48, 128), 128),
                   nn.AdaptiveAvgPool2d((1, 1)),
                   nn.Flatten())

# The final layer has 10 outputs because Fashion-MNIST has 10 classes
net = nn.Sequential(b1, b2, b3, b4, b5, nn.Linear(1024, 10))
```
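Because the four paths are concatenated along the channel dimension, an Inception block outputs `c1 + c2[1] + c3[1] + c4` channels, and each block's `in_channels` must equal the previous block's output (max pooling between stages leaves the channel count unchanged). The plain-Python sketch below, using a hypothetical helper `inception_out_channels` and the configurations copied from the stages above, checks that bookkeeping and confirms that stage 5 ends with the 1024 features expected by `nn.Linear(1024, 10)`.

```python
def inception_out_channels(c1, c2, c3, c4):
    """Output channels of an Inception block: the sum over its four paths."""
    return c1 + c2[1] + c3[1] + c4

# (in_channels, c1, c2, c3, c4) for every Inception block in stages 3-5
configs = [
    (192, 64, (96, 128), (16, 32), 32),
    (256, 128, (128, 192), (32, 96), 64),
    (480, 192, (96, 208), (16, 48), 64),
    (512, 160, (112, 224), (24, 64), 64),
    (512, 128, (128, 256), (24, 64), 64),
    (512, 112, (144, 288), (32, 64), 64),
    (528, 256, (160, 320), (32, 128), 128),
    (832, 256, (160, 320), (32, 128), 128),
    (832, 384, (192, 384), (48, 128), 128),
]

outs = []
prev = 192  # stage 2 ends with 192 channels
for i, (in_ch, c1, c2, c3, c4) in enumerate(configs):
    # Each block must accept exactly what the previous layer produced
    assert in_ch == prev, f"block {i}: expected {prev} input channels, got {in_ch}"
    prev = inception_out_channels(c1, c2, c3, c4)
    outs.append(prev)

print(outs)  # [256, 480, 512, 512, 512, 528, 832, 832, 1024]
```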

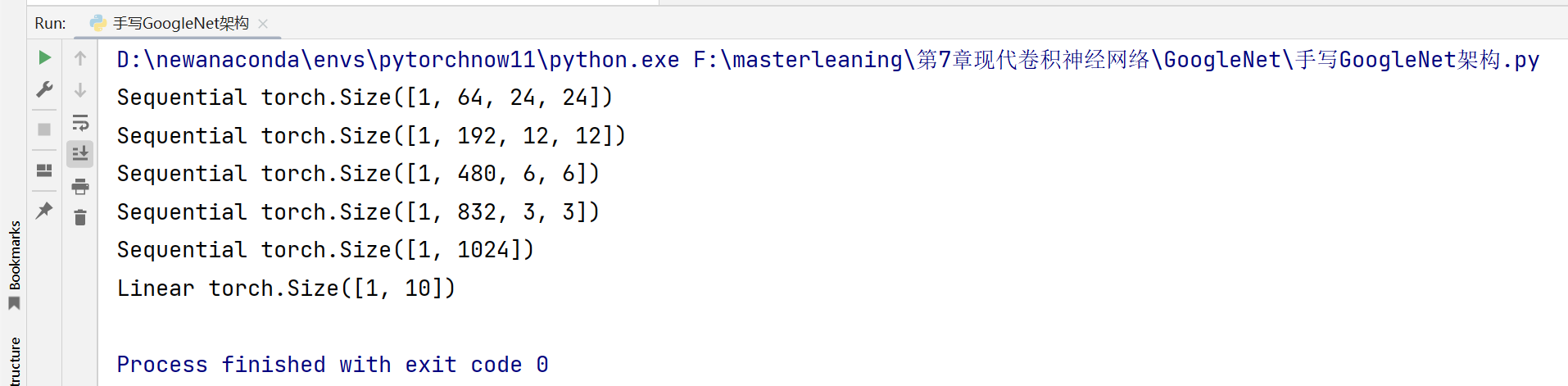

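```python
# Feed a random 96x96 single-channel input through the network
# and print the output shape after each stage
X = torch.rand((1, 1, 96, 96))
for layer in net:
    X = layer(X)
    print(layer.__class__.__name__, X.shape)
```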
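The spatial sizes printed by this loop can be predicted by hand with the usual output-size formula for convolution and pooling, ⌊(n + 2p − k)/s⌋ + 1. In the plain-Python sketch below (`out_size` is a hypothetical helper), every Inception path keeps height and width unchanged, which is what allows the four outputs to be concatenated; only the stride-2 layers shrink the feature map, taking a 96×96 input down to 3×3 before the global average pooling in `b5` reduces it to 1×1.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a Conv2d/MaxPool2d layer (floor rounding)."""
    return (n + 2 * padding - kernel) // stride + 1

# Inception paths: 1x1 conv, 3x3 conv/pool with padding 1, 5x5 conv with padding 2
for kernel, padding in [(1, 0), (3, 1), (5, 2)]:
    assert out_size(12, kernel, padding=padding) == 12

n = 96
n = out_size(n, 7, stride=2, padding=3)  # b1: 7x7 conv, stride 2 -> 48
n = out_size(n, 3, stride=2, padding=1)  # b1: 3x3 max pool, stride 2 -> 24
sizes = [n]

# b2, b3 and b4 each end with a 3x3, stride-2 max pool; their other
# layers (padded convolutions, Inception blocks) keep the size unchanged
for _ in range(3):
    n = out_size(n, 3, stride=2, padding=1)
    sizes.append(n)

print(sizes)  # [24, 12, 6, 3]
```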


2. Training GoogLeNet

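```python
import torch
from d2l import torch as d2l
from torch import nn
from torch.nn import functional as F

# 1. Inception block
class Inception(nn.Module):
    # An Inception block has four parallel paths.
    # in_channels: number of input channels; c1..c4: output channels of each path.
    # If a path contains several convolutional layers, its entry is a tuple
    # with one output-channel count per layer (c2 and c3 below).
    def __init__(self, in_channels, c1, c2, c3, c4, **kwargs):
        super(Inception, self).__init__(**kwargs)
        # Path 1: 1x1 convolution
        self.p1_1 = nn.Conv2d(in_channels, c1, kernel_size=1)
        # Path 2: 1x1 convolution -> 3x3 convolution, padding=1
        self.p2_1 = nn.Conv2d(in_channels, c2[0], kernel_size=1)
        self.p2_2 = nn.Conv2d(c2[0], c2[1], kernel_size=3, padding=1)
        # Path 3: 1x1 convolution -> 5x5 convolution, padding=2
        self.p3_1 = nn.Conv2d(in_channels, c3[0], kernel_size=1)
        self.p3_2 = nn.Conv2d(c3[0], c3[1], kernel_size=5, padding=2)
        # Path 4: 3x3 max pooling (stride=1, padding=1) -> 1x1 convolution
        self.p4_1 = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
        self.p4_2 = nn.Conv2d(in_channels, c4, kernel_size=1)

    def forward(self, x):
        p1 = F.relu(self.p1_1(x))
        p2 = F.relu(self.p2_2(F.relu(self.p2_1(x))))
        p3 = F.relu(self.p3_2(F.relu(self.p3_1(x))))
        p4 = F.relu(self.p4_2(self.p4_1(x)))
        # Concatenate the four path outputs along the channel dimension
        return torch.cat((p1, p2, p3, p4), dim=1)

# Stages

# Stage 1: 7x7 convolution (64 channels, stride 2) + 3x3 max pooling (stride 2)
b1 = nn.Sequential(nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
                   nn.ReLU(),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 2: 1x1 convolution -> 3x3 convolution (192 channels) -> max pooling
b2 = nn.Sequential(nn.Conv2d(64, 64, kernel_size=1),
                   nn.ReLU(),
                   nn.Conv2d(64, 192, kernel_size=3, padding=1),
                   nn.ReLU(),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# The number of Inception blocks per stage is a design choice.

# Stage 3: 2 Inception blocks and a max-pooling layer
b3 = nn.Sequential(Inception(192, 64, (96, 128), (16, 32), 32),
                   Inception(256, 128, (128, 192), (32, 96), 64),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 4: 5 Inception blocks and a max-pooling layer
b4 = nn.Sequential(Inception(480, 192, (96, 208), (16, 48), 64),
                   Inception(512, 160, (112, 224), (24, 64), 64),
                   Inception(512, 128, (128, 256), (24, 64), 64),
                   Inception(512, 112, (144, 288), (32, 64), 64),
                   Inception(528, 256, (160, 320), (32, 128), 128),
                   nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

# Stage 5: 2 Inception blocks and global average pooling (AdaptiveAvgPool2d)
b5 = nn.Sequential(Inception(832, 256, (160, 320), (32, 128), 128),
                   Inception(832, 384, (192, 384), (48, 128), 128),
                   nn.AdaptiveAvgPool2d((1, 1)),
                   nn.Flatten())

# The final layer has 10 outputs because Fashion-MNIST has 10 classes
net = nn.Sequential(b1, b2, b3, b4, b5, nn.Linear(1024, 10))

# Load Fashion-MNIST, resizing the 28x28 images to 96x96
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size=128, resize=96)

lr = 0.1
num_epochs = 10

# Train on the GPU if one is available (d2l.try_gpu falls back to the CPU)
d2l.train_ch6(net, train_iter, test_iter, num_epochs, lr, d2l.try_gpu())
```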
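For a sense of scale, the training call performs a fixed number of minibatch updates at learning rate 0.1. The count below is a back-of-the-envelope sketch that assumes the standard Fashion-MNIST training split of 60,000 images and a data loader that keeps the last partial batch; both are assumptions, not something stated in the script.

```python
import math

# Assumptions: 60,000 training images; the last partial batch is kept
num_train = 60_000
batch_size, num_epochs = 128, 10

steps_per_epoch = math.ceil(num_train / batch_size)  # minibatches per epoch
total_steps = steps_per_epoch * num_epochs            # parameter updates overall

print(steps_per_epoch, total_steps)  # 469 4690
```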