I. Add the services to docker-compose

    # elasticsearch
    elasticsearch:
      image: elasticsearch:7.17.6
      container_name: elasticsearch
      # Note: with network_mode "host" the container shares the host's
      # network stack, so these port mappings are not applied.
      ports:
        - "9410:9410"
        - "9420:9420"
      environment:
        # Set the cluster name
        cluster.name: elasticsearch
        # Start in single-node mode
        discovery.type: single-node
        ES_JAVA_OPTS: "-Xms512m -Xmx512m"
      volumes:
        - /root/docker/elk/elasticsearch/plugins:/usr/share/elasticsearch/plugins
        - /root/docker/elk/elasticsearch/data:/usr/share/elasticsearch/data
        - /root/docker/elk/elasticsearch/logs:/usr/share/elasticsearch/logs
      network_mode: "host"

    kibana:
      image: kibana:7.17.6
      container_name: kibana
      ports:
        - "9430:9430"
      depends_on:
        # Start kibana only after elasticsearch has started
        - elasticsearch
      environment:
        # Set the UI language to Chinese
        I18N_LOCALE: zh-CN
        # Public access URL
        # SERVER_PUBLICBASEURL: https://kibana.cloud.com
      volumes:
        - /root/docker/elk/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml
      network_mode: "host"

    logstash:
      image: logstash:7.17.6
      container_name: logstash
      ports:
        - "9440:9440"
      volumes:
        - /root/docker/elk/logstash/pipeline/logstash.conf:/usr/share/logstash/pipeline/logstash.conf
        - /root/docker/elk/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml
      depends_on:
        - elasticsearch
      network_mode: "host"

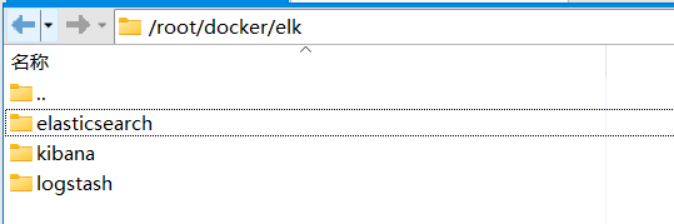

II. Create the directories

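Under the docker directory (/root/docker in the volume paths of section I), create the elk directory and its subdirectories.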
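The directory layout can be derived from the volume paths in the compose file. The following is a minimal sketch, not the author's own script: `create_elk_dirs` is a hypothetical helper name, and the base directory is passed as an argument so the same function works for a path other than /root/docker/elk.

```shell
#!/bin/sh
# create_elk_dirs BASE
# Hypothetical helper: creates the host directories that the compose
# file bind-mounts, relative to the given base directory.
create_elk_dirs() {
    base="$1"
    mkdir -p \
        "$base/elasticsearch/plugins" \
        "$base/elasticsearch/data" \
        "$base/elasticsearch/logs" \
        "$base/kibana/config" \
        "$base/logstash/config" \
        "$base/logstash/pipeline"
}

# Usage (assumes the base path used throughout this post):
#   create_elk_dirs /root/docker/elk
```

If Elasticsearch later fails to start with a permissions error on its data or logs directory, the ownership of these host directories is the first thing to check, since the process inside the container does not run as root.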

III. Copy the configuration files

1 Copy the kibana-es configuration file to /root/docker/elk/kibana/config/kibana.yml

    server.host: "0.0.0.0"
    server.shutdownTimeout: "5s"
    elasticsearch.hosts: [ "http://127.0.0.1:9200" ]
    monitoring.ui.container.elasticsearch.enabled: true

2 Copy the logstash-es configuration file to /root/docker/elk/logstash/config/logstash.yml

    http.host: "0.0.0.0"
    xpack.monitoring.elasticsearch.hosts: [ "http://127.0.0.1:9200" ]

3 Copy the logstash-mysql configuration file to /root/docker/elk/logstash/pipeline/logstash.conf

    input {
      jdbc {
        jdbc_connection_string => "jdbc:mysql://192.168.1.250:3306/kintech-cloud-bo?useUnicode=true&characterEncoding=UTF-8&autoReconnect=true&autoReconnectForPools=true&noAccessToProcedureBodies=true&useSSL=false"
        jdbc_user => "root"
        jdbc_password => "Helka1234!@#$"
        jdbc_driver_library => "/app/mysql.jar"
        jdbc_driver_class => "com.mysql.cj.jdbc.Driver"
        statement => "SELECT * FROM bo_sop_content where update_time>:sql_last_value"
        schedule => "* * * * *"
        use_column_value => true
        #last_run_metadata_path => "/usr/share/logstash/track_time"
        #clean_run => false
        tracking_column_type => "timestamp"
        tracking_column => "update_time"
      }
    }
    output {
      elasticsearch {
        hosts => "192.168.1.247:9200"
        index => "bo_sop_content"
      }
    }
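Before starting the containers it is worth confirming that all three files are in place. When a bind-mount source path does not exist on the host, Docker typically creates a directory there, and the container then fails to read its configuration. The check below is a small sketch; `check_elk_configs` is a hypothetical helper name, and the file list is taken from the paths in steps 1 to 3.

```shell
#!/bin/sh
# check_elk_configs BASE
# Hypothetical helper: returns 0 only if every config file that the
# containers bind-mount exists as a regular file under BASE.
check_elk_configs() {
    base="$1"
    missing=0
    for f in \
        "$base/kibana/config/kibana.yml" \
        "$base/logstash/config/logstash.yml" \
        "$base/logstash/pipeline/logstash.conf"
    do
        if [ ! -f "$f" ]; then
            echo "missing or not a regular file: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage (assumes the base path used throughout this post):
#   check_elk_configs /root/docker/elk && echo "configs OK"
```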

IV. Start the services

    #1 Start elasticsearch and kibana together; logstash has to be started separately
    docker-compose up -d elasticsearch kibana

    #2 Start es (default port 9200)
    docker run -d elasticsearch:7.17.6

    #3 Start kibana (default port 5601)
    docker run -d kibana:7.17.6

    #4 Start logstash (also mounts the MySQL JDBC driver referenced in logstash.conf)
    docker run -d \
      -v /root/docker/elk/logstash/pipeline/logstash.conf:/usr/share/logstash/pipeline/logstash.conf \
      -v /root/docker/elk/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml \
      -v /root/lib/mysql.jar:/app/mysql.jar \
      --name=logstash logstash:7.17.6
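Elasticsearch takes a little while to accept requests after the container starts, so a short polling loop can help before checking whether Logstash has written any documents. This is a sketch under assumptions: `wait_for_http` is a hypothetical helper, `curl` is assumed to be installed on the host, and the addresses in the usage comments are the ones that appear in the configuration files above.

```shell
#!/bin/sh
# wait_for_http URL [ATTEMPTS]
# Hypothetical helper: polls URL with curl until it answers with a
# successful HTTP status, giving up after ATTEMPTS tries (default 30).
wait_for_http() {
    url="$1"
    attempts="${2:-30}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        if curl -fsS -o /dev/null "$url" 2>/dev/null; then
            return 0
        fi
        i=$((i + 1))
        sleep 2
    done
    return 1
}

# Usage (addresses taken from kibana.yml and logstash.conf above):
#   wait_for_http http://127.0.0.1:9200 && echo "elasticsearch is up"
#   curl -s "http://192.168.1.247:9200/bo_sop_content/_count?pretty"
```

The second usage line queries the `_count` endpoint of the `bo_sop_content` index. Because the pipeline is scheduled to run once a minute, the count should become non-zero shortly after the first run, provided the table contains rows.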